Settings

General Parameters

Common settings (as of version 2.3.4) can be found under Settings > General Parameters.

| Location | Field | Name | Description | Unit | Default | Range |

|---|---|---|---|---|---|---|

| Common | Output | Publish Point-Cloud | The level of point-cloud publishing to the output port. Higher levels greatly increase the bandwidth between the algo nodes and the master node! - 0: Nothing published - 1: Only object points published - 2: Both object & background points published - 3: All points (including those outside the detection range) published | int | 1 | |

| Background Points Update Interval | Time between updates of background and ground point cloud information from algo nodes to the master node. Updating frequently can be resource-intensive. | seconds | 1.0 | |||

| Sensor Status Update Interval | Time between updates of sensor information from algo nodes to the master node. | seconds | 0.5 | |||

| Output Sending Type Selection | Method for sending and updating new results. - 0: Instant Sending: Publish result whenever a new one is available. - 1: Fixed-Period Sending: Publish the most recent result periodically. The published result might already have been published in a previous frame. - 2: Fixed-Period with Max-Delay: Publish the most recent result periodically. If there is no new result, the sending is postponed until a new result is received or a maximum delay time has passed. | int | 1 | |||

| Update Interval | Publish interval for output sending type 1 - Fixed-Period Sending and type 2 - Fixed-Period with Max-Delay | seconds | 0.1 | |||

| Point-Cloud Bandwidth Reduction | Decreases the communication burden by reducing point-cloud data size. When enabled, this option has the following effects: - In calibration mode, only one point cloud will be sent at a time (in rotation). - In runtime mode, background and ground points will be downsampled. - Points out of detection range will not be published regardless of the point cloud publishing level. | boolean | false | |||

| Downsampling Resolution | Resolution of the downsampling method used for point-cloud bandwidth reduction. Value can be between 0 and 1. | meters | 0.3 | |||

| Input | Maximum Lidar Input Interval | If there is no response from one of the lidar sensors for this time duration, that lidar sensor will be considered dead. | seconds | 7 | [0,30] | |

| Restart dead lidar drivers automatically | When this is enabled, the lidar sensor driver will be restarted automatically if it does not respond for the duration specified in “Maximum Lidar Input Interval”. | True/ False | True | |||

| Frame Update Setting | Method for updating new frames from received results - 0: Instant: Update frames as soon as results arrive - 1: Use buffer: Update frames from a buffer that stores frames and releases them after a specified delay time, to tolerate network traffic | int | 0 | |||

| Minimum Pending Time (For Using Instant Update) | Due to connection issues, the time interval between two point-cloud messages of one lidar can be unreasonably small. This minimum interval ensures that each lidar point-cloud message in the pipeline has a reasonable time gap to the next one. A message whose time difference from the previous message is below this minimum interval is discarded. (This option is only visible if "Instant" is selected in the Frame Update Method.) | seconds | 0.02 | [0, 0.1] | ||

| Maximum Pending Time (For Using Instant Update) | The perception pipeline is triggered as soon as data from all connected lidar sensors has been received, or once new data from at least one lidar sensor has arrived and this time threshold is reached. (This option is only visible if "Instant" is selected in the Frame Update Method.) | seconds | 0.15 | [0.1, 1.0] | ||

| Number of Frames Operating Delayed (For Using Buffer Update) | Number of frames operating behind the current time. The frame duration is specified by the frame interval parameter. | 3 | [1, +inf] | |||

| Frame Interval [s] (For Using Buffer Update) | Perception pipeline will be triggered with new input according to the frame interval. | 0.15 | [0.01, +inf] | |||

| Retro-Reflection Tracking | Enable Retro-Reflection Tracking | Enables marking of objects as retro-reflective. For this to work properly the retro-reflectivity threshold setting for each lidar also needs to be tuned properly. | False | |||

| Min Point Count | For an object to be considered retro-reflective it has to have at least this many retro-reflective points. | int | 5 | [1, +inf] | ||

| History Threshold | The number of frames an object will stay retro-reflective after being classified as retro-reflective. | int | 10 | [0, +inf] | ||

| Reflection Filter | Use Simple Filter [2D] | Enable simple filtering algorithm for faster but less accurate filtering. The height will not be considered. | True | |||

| Use detection limit | If enabled, any sensor further from the reflection filter than the detection limit will not be considered during filtering. A smaller detection limit will make filtering faster. | True | ||||

| Detection limit [m] | Detection range of the option "Use detection limit". | meters | 50.0 | [0, +inf] | ||

| Zone Setting | Trigger Zone Events with MISC Objects | Enabling this will trigger zone events with MISC objects. | True/ False | False | ||

| Zone Filter Resolution [m] | Grid Cell Size for Zone Filter. | meters | 0.1 | [0, +inf] | ||

| Tracking History | Tracking History Length | The number of history positions to keep | int | 100 | [0, +Inf.] | |

| History Smoothing Level | Trajectory smoothing level | int | 3 | [0, 3] | ||

| Trajectory Prediction | Number of Prediction Steps | Number of prediction steps | int | 10 | [0, +Inf.] | |

| Prediction Step Time-Horizon | Time step between two predicted points | seconds | 0.1 | [0, +Inf.] | ||

| Max. Acceleration Filter Threshold | Maximum acceleration for prediction | m/s² | 15.0 | [0, +Inf.] |
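
The Minimum Pending Time rule in the table above can be illustrated with a short sketch. It assumes that "previous message" means the last message that was kept; the function name and the timestamps are hypothetical and are not part of SENSR.

```python
def filter_by_min_pending_time(timestamps, min_pending_time=0.02):
    """Keep only messages that arrive at least min_pending_time seconds
    after the last kept message (assumed interpretation of the rule)."""
    kept = []
    for t in timestamps:
        # The first message is always kept; later ones need a large enough gap.
        if not kept or t - kept[-1] >= min_pending_time:
            kept.append(t)
    return kept


# Arrival times (seconds) of point-cloud messages from one lidar (made-up data).
arrivals = [0.0, 0.005, 0.1, 0.105, 0.2]
print(filter_by_min_pending_time(arrivals))
```

With the default of 0.02 seconds, the two messages that arrive only 5 ms after their predecessor are dropped.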

Master Node Parameters

Master settings (as of version 2.3.4) can be found under Settings > Master Node Parameters.

| Location | Name | Description | Unit | Default | Range |

|---|---|---|---|---|---|

| Output-Merger > Algo-Node Result Timeouts | Algo-Node Object Result-Timeout | If the point-cloud reception time of a result from an algo node differs from the latest reception time by more than this timeout, that algo node's result is removed from result aggregation. For the point result, the point-cloud update interval is added to this timeout. For the sensor statuses, the sensor status update interval is added to this timeout. | seconds | 0.2 | [0.1, +Inf.] |

| Algo-Node Point Result Timeout | - | seconds | 2.0 | [0.1, +Inf.] | |

| Algo-Node Sensor Status Timeout | - | seconds | 2.0 | [0.1, +Inf.] | |

| Output-Merger > Multi Algo-Node Merger | Association Distance Threshold | Distance threshold to associate objects in two nodes | meters | 2.0 | [0, +Inf.] |
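
As a rough illustration of the result timeouts above, the following sketch drops algo-node results whose reception time lags the newest one by more than the timeout. The function name, the node names, and the `extra_interval` argument (standing in for the added update interval) are assumptions made for this example only.

```python
def nodes_within_timeout(receive_times, timeout=0.2, extra_interval=0.0):
    """Return the names of algo nodes whose result is still fresh enough.

    receive_times: dict mapping node name -> reception time in seconds.
    extra_interval: update interval added to the timeout for point results
                    or sensor statuses (0.0 for object results).
    """
    if not receive_times:
        return []
    latest = max(receive_times.values())
    limit = timeout + extra_interval
    return sorted(name for name, t in receive_times.items()
                  if latest - t <= limit)


# Hypothetical reception times for three algo nodes.
times = {"node_a": 10.0, "node_b": 9.9, "node_c": 9.5}
print(nodes_within_timeout(times))                                   # object results
print(nodes_within_timeout(times, timeout=2.0, extra_interval=1.0))  # point results
```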

Algo Node Parameters

To change algorithm settings, open the algorithm window (Settings > Algo Node Parameters > Algorithm (ip_address) in the menu bar). The parameter specifications and more detail on how to tune the parameters are given below. Change algorithm parameters with caution, as wrong parameters can negatively affect performance.

| Location | Field | Name | Description | Unit | Range |

|---|---|---|---|---|---|

| Parameters > Object | - | Allow Floating-Object | If True, min. z of the object is set to be the min. z-value of the object bounding box instead of ground height. | True/ False | |

| Tracked Object's Min. Points | The minimum number of points of an object to be tracked. | [0, +Inf.] | |||

| Tracked Object's Min Radius | Minimum object radius to be tracked. | meters | |||

| Parameters > Tracking | - | Validation Period | Period of checking validity in the early stage of tracking. This time determines length of the VALIDATION period, or how quickly a new object will be tracked and classified. | seconds | [0, +Inf.] |

| Invalidation Period | Period of short term prediction when tracking is lost while in the VALIDATION period. A longer period will allow for objects that are at the edge of detection range to be tracked, but will be more likely to introduce false alarms. | seconds | [0, +Inf.] | ||

| Drifting Period | Period of short term location prediction when tracking is lost in TRACKING status. A longer period can help with obstructions, but can lead to object ID switching in busy environments. | seconds | [0, +Inf.] | ||

| Drifting Period for Miscellaneous Objects | Drifting period applied to miscellaneous objects. | seconds | [0, +Inf.] | ||

| Algorithm Components > Ground Detector | Elevation Ground Detector | Apply | If True, use the ground detector. | True/ False | |

| Max. Ground Height | Maximum height for ground segmentation. | meters | [-0.5, +Inf.] | ||

| Limit Detection Range | Limits the ground detection range (xy-range). If enabled, all points outside a certain xy-range will not be considered as ground points. Note that it is not applied to the z detection range. | True/ False | |||

| Limit Detection Range > Max Detection Range | Maximum xy-range to apply ground detection. | meters | [0, +Inf.] | ||

| Algorithm Components > Background Detector | Background Detector | Apply | If True, use the background detector. | True/ False | |

| Time to Initialize Background | Initial period used to learn background points. | seconds | [0, +Inf.] | ||

| Time to Become Background | Time after which a static object becomes background. | seconds | [1, +Inf.] | ||

| Time to Become Foreground | Time after which a background object becomes foreground once it starts moving. | seconds | [1, +Inf.] | ||

| Use Multi-lidar Background Fusion | Fuse background information from multiple lidars. | True/False | |||

| Number of Frames To Estimate Detection Range | Number of initial frames used to estimate the background detection range | [1, +Inf] | |||

| Use Global Detection Range | If this option is enabled, the global detection range is used as the background detection range instead of the estimate from the initial frames. | True/False | |||

| Resolutions (Range, Azimuth, Elevation) | Range (distance), Azimuth angle and Elevation angle resolution. | meters, degree, degree | [0, +Inf] | ||

| Algorithm Components > Clusterer | Grid-Clusterer | x-Resolution | Grid resolution in x-axis. | meters | (0, +Inf.] |

| y-Resolution | Grid resolution in y-axis. | meters | (0, +Inf] | ||

| Cell Point Threshold | Minimum points per cell for clustering | [1, +Inf.] | |||

| Point-Size Growing > Enable Point-Size Growing | Allows a bigger clustering resolution to be used for far-distant objects. | True/ False | |||

| Point-Size Growing > Point-Size Scaling Factor per Meter | The scaling factor of the point-size radius per meter of distance to the lidar. | meters | |||

| Point-Size Growing > Max. x-Resolution | Max grid resolution in x-axis when using Point-Size Growing. | meters | [0, +inf] | ||

| Point-Size Growing > Max. y-Resolution | Max grid resolution in y-axis when using Point-Size Growing. | meters | [0, +inf] | ||

| Algorithm Components > Tracker | Hybrid-Tracker | Apply | If true, use Hybrid-Tracker | True/ False | |

| Association-Distance | Distance threshold to associate objects in two consecutive frames. | meters | [0.01, +Inf] | ||

| Merge Object Level | Object merging level that decides how easily small objects close to each other can be merged into one object. | [0, 10] | |||

| Max. Grow-Size Tolerance | Maximum size growth allowed for a merged object. | meters | [1, +inf] | ||

| Max-Dimensions (W, L) | Maximum size of an object that can be tracked. | meters | [0.01, +inf] | ||

| Human-Tracker | Apply | If true, use Human-Tracker | True/ False | ||

| Association-Distance | Distance threshold to associate objects in two consecutive frames. | meters | [0.01, +Inf] | ||

| Merge/Split Size Mul. Threshold | Size ratio threshold that allows merging/splitting. | [0.0, +Inf] | |||

| Merge/Split Size Add. Threshold | Size increase/decrease threshold that allows merging/splitting. | meters | [0.0, +Inf] | ||

| Min. Time for Splitting | Minimum lifetime of objects to apply merger/ splitter check | seconds | [0.0, +Inf] | ||

| GPU Pipeline Supported Tracker (GPU plug-in) | Apply | If True, use GPU Pipeline Supported Tracker | True/ False | ||

| Tracking Association Distance | Distance threshold to associate objects in two consecutive frames. | meters | [0.0, +Inf] | ||

| Tracking Association Distance For Non-Tracking Status Objects | Distance threshold to associate objects in two consecutive frames for non-tracking status objects. | meters | [0.0, +Inf] | ||

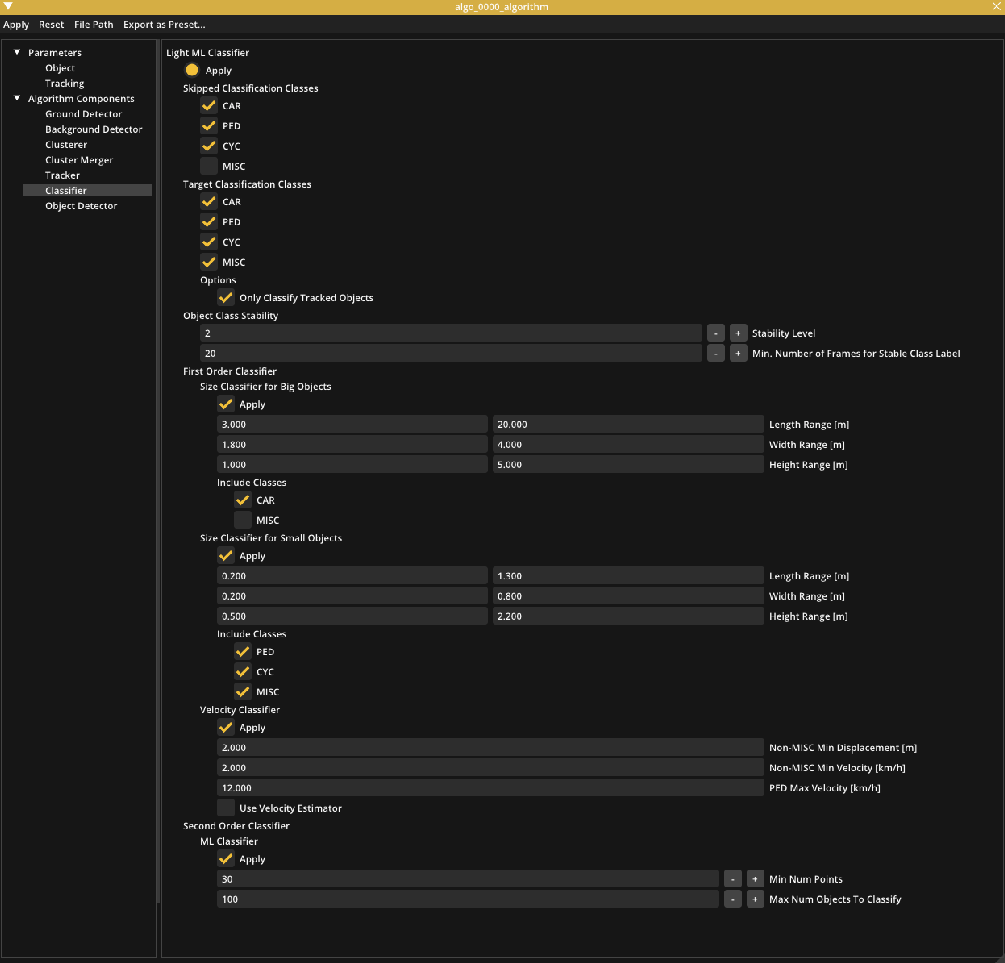

| Algorithm Components > Classifier | Light ML Classifier | Apply | If True, use Light ML Classifier | True/ False | |

| Target Classification Classes | CAR/PED/CYC/MISC | The classes the output of the classifier will include. E.g. if only PED(estrian) and MISC(ellaneous) are chosen all the objects will be classified as either PED or MISC. | True/ False | ||

| Only Classify Tracked Objects | If selected, only objects with tracking status will be classified | True/False | |||

| Skipped Classification Classes | CAR/PED/CYC/MISC | Any class marked with this option will be ignored by the classifier. | True/ False | ||

| Object Class Stability > Stability Level | Classification stability level: 0: Disabled, 1: If an object class is stable, the classification will be kept until replaced by another stable class, 2: If an object class is stable, the classification will be kept permanently. | ||||

| Object Class Stability > Min. Number of Frames for Stable Class Label | The number of frames an object class needs to be kept continuously in order to become semi-permanent (for Stability Level = 1) or permanent (for Stability Level = 2). | ||||

| First Order Classifier | This section is applied first; only if it can’t classify an object can the second order classifier assist it. | ||||

| Size Classifier for Big Objects | Apply | If True, use the size classifier for big objects. If used, all objects which have size bigger than Min Length, Width, Height will be classified as one of Include Classes. | True/ False | ||

| Length Range/ Width Range/ Height Range | The range of length, width, height to classify as big objects. | meters | [0, +Inf] | ||

| Include Classes | The classes the output of the size classifier for big objects will include. | True/ False | |||

| Size Classifier for Small Objects | Apply | If True, use the size classifier for small objects. If used, all objects which have size in the Length Range, Width Range, Height Range will be classified as one of Include Classes. If an object is classified as a big object, it won’t be classified as a small object even though it’s in the small object range. | True/ False | ||

| Length Range/ Width Range/ Height Range | The range of length, width, height to classify as small objects. | meters | [0, +Inf] | ||

| Include Classes | The classes the output of the size classifier for small objects will include. | True/ False | |||

| Velocity Classifier | Apply | If True, use the velocity classifier. | True/ False | ||

| Non-MISC Min Displacement | Minimum displacement above which an object will not be classified as MISC. | meters | [0, +Inf] | ||

| Non-MISC Min Velocity | Minimum velocity above which an object will not be classified as MISC. | km/h | [0, +Inf] | ||

| PED Max Velocity | Velocity above which an object will not be classified as PED. | km/h | [0, +Inf] | ||

| Use Velocity Estimator | Enable this to use the velocity estimator | True/ False | |||

| Second Order Classifier > ML Classifier | Apply | If True, use the machine learning classifier. If applied, it will be used only after the size classifier and velocity classifier. E.g. if, after the size classifier and velocity classifier, the object is classified as either PED or CYC, the ML classifier will decide whether it’s a PED or a CYC. | True/ False | ||

| Min Num Points | Minimum number of points to use ML classifier. | [0, +inf] | |||

| Max Num Objects To Classify | Maximum number of objects that can be classified by the ML classifier. | [0, +inf] | |||

| Algorithm Components > Cluster Merger (GPU plug-in) | Cluster Merger | Apply | If True, enables Cluster Merger. | True/ False | |

| Algorithm Components > Object Detector (GPU plug-in) | 2D Object Detector | Apply | If True, enables the 2D Object Detector | True/ False | |

| Car Probability Threshold | Minimum probability to be considered CAR | [0.0, 1.0] | |||

| Pedestrian Probability Threshold | Minimum probability to be considered PED | [0.0, 1.0] | |||

| Cyclist Probability Threshold | Minimum probability to be considered CYC | [0.0, 1.0] | |||

| Exclude Invalid Points | If selected, points in an exclusion zone will not be included in the object detection model. | True/ False | |||

| Limit Detection Range > Enable | Allows object detection to cover a smaller area than the global detection range. | True/ False | |||

| Limit Detection Range > Max Detection Range | Maximum range to apply object detection. | meters | [0, +Inf] |
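
The Object Class Stability levels described in the table can be read as a small state machine. The sketch below is one possible interpretation, not the SENSR implementation: the class name `StableClassLabel` and the rule that a class becomes stable after `min_frames` consecutive identical labels are assumptions.

```python
class StableClassLabel:
    """Assumed model of Stability Level 0/1/2 for a single object."""

    def __init__(self, stability_level, min_frames):
        self.level = stability_level
        self.min_frames = min_frames
        self.stable = None      # label currently held as stable, if any
        self.candidate = None   # label seen in the most recent frames
        self.count = 0          # consecutive frames with the candidate label

    def update(self, raw_label):
        if self.level == 0:
            return raw_label    # stability disabled: pass the label through
        if raw_label == self.candidate:
            self.count += 1
        else:
            self.candidate, self.count = raw_label, 1
        if self.count >= self.min_frames:
            # Level 1: a newly stable class replaces the old one.
            # Level 2: the first stable class is kept permanently.
            if self.stable is None or self.level == 1:
                self.stable = self.candidate
        return self.stable if self.stable is not None else raw_label


labels = ["PED", "PED", "CYC", "CYC"]
for level in (0, 1, 2):
    tracker = StableClassLabel(stability_level=level, min_frames=2)
    print(level, [tracker.update(label) for label in labels])
```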

More on algorithm settings

Detection range

It is recommended to adjust some parameters to better fit each specific use case. As mentioned in previous sections, detection range is one of the important parameters that should be tuned carefully to save computational resources and reduce noise and false detections (refer to Project Setup, Optimizing the world size). If you want to change the detection range while in runtime mode, go back to project setup mode (Mode > Project Setup in the menu bar) and click the gear icon Algo Node Settings.

Background Detector and Zone

The background detector is used to automatically set exclusion zones (called background zones) to ignore static objects in the detection pipeline. If an object stays still for more than Time to Become Background seconds, the background detector will set a background zone around that object. As a consequence, that object will become background and won’t be tracked. If a background object moves away from its background zone, it takes Time to Become Foreground seconds for the zone to be removed. Since lidar is noisy and some objects can vibrate (vegetation, flags, ...), the background detector can’t completely remove all the static objects. It’s recommended to use exclusion zones (see exclusion zones) for regions which you don’t want to cover.

If you also want to track objects which can be static for a long time (e.g. cars in a parking lot), you can use static zones (background detection is not applied to static zones) or disable the Background Detector and use only exclusion zones instead.
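
The interplay of Time to Become Background and Time to Become Foreground can be sketched as a timer per object. This is a simplified model under stated assumptions: the class name `BackgroundState`, the per-frame `is_static` flag, and the timer reset on any contradicting observation are all hypothetical and only illustrate the behaviour described above.

```python
class BackgroundState:
    """Assumed per-object model of the background/foreground transition."""

    def __init__(self, time_to_become_background, time_to_become_foreground):
        self.time_to_become_background = time_to_become_background
        self.time_to_become_foreground = time_to_become_foreground
        self.is_background = False
        self.elapsed = 0.0  # time spent contradicting the current state

    def update(self, is_static, dt):
        """Advance by dt seconds; return True if the object is background."""
        # A static foreground object or a moving background object
        # contradicts the current state and runs the timer.
        contradicts = is_static != self.is_background
        if not contradicts:
            self.elapsed = 0.0
            return self.is_background
        self.elapsed += dt
        threshold = (self.time_to_become_foreground if self.is_background
                     else self.time_to_become_background)
        if self.elapsed >= threshold:
            self.is_background = not self.is_background
            self.elapsed = 0.0
        return self.is_background


state = BackgroundState(time_to_become_background=3.0,
                        time_to_become_foreground=2.0)
print([state.update(is_static=True, dt=1.0) for _ in range(3)])   # parks
print([state.update(is_static=False, dt=1.0) for _ in range(2)])  # drives off
```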

Tracker

Non-GPU pipeline

Human-Tracker is optimized for human-only tracking applications. If lidar is used in a scene which doesn’t have cars and cyclists (e.g. indoors), Human-Tracker is recommended. If lidar is used outdoors (where there are cars/cyclists), Hybrid-Tracker would perform better.

Since lidar point-clouds are sparse, a big car can break into multiple small objects. Hybrid-Tracker can merge those small objects together. The Merge Object Level parameter helps to control this merging process: it indicates how easily objects are merged. If lidars are used to track big objects (big trucks/containers) and there aren't many people, increasing Merge Object Level can help to detect the big objects better. But if the scene is a crowded intersection with many pedestrians, the value needs to be kept small to avoid merging pedestrians that are close together into one object. Merge Object Level can have a value in the range [0, 10]. We recommend using Merge Object Level 3-4 for crowded intersections and 7-8 for highways with many big trucks.

GPU pipeline

With the GPU pipeline, GPU Pipeline Supported Tracker is recommended for outdoor scenes. For indoor or human-only scenes, Human-Tracker is still recommended.
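
The tracker recommendations in this section can be summarized as a small decision helper. The function and argument names are hypothetical; only the returned tracker names and the Merge Object Level ranges come from the text above.

```python
def recommend_tracker(has_vehicles, gpu_pipeline):
    """Return the tracker recommended in this section for a scene.

    has_vehicles: True if cars or cyclists are expected (e.g. outdoors).
    gpu_pipeline: True if the GPU pipeline is in use.
    """
    if not has_vehicles:
        return "Human-Tracker"
    return "GPU Pipeline Supported Tracker" if gpu_pipeline else "Hybrid-Tracker"


# Recommended Merge Object Level ranges for Hybrid-Tracker (inclusive).
MERGE_OBJECT_LEVEL_HINTS = {
    "crowded intersection": (3, 4),
    "highway with many big trucks": (7, 8),
}

print(recommend_tracker(has_vehicles=False, gpu_pipeline=True))
print(recommend_tracker(has_vehicles=True, gpu_pipeline=False))
```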

Classifier

Using Target Classification Classes can reduce misclassification. E.g. if lidars are used on highways to detect cars/cyclists, we recommend choosing CAR, CYC, MISC as target classes. And if lidars are used indoors, choosing PED, MISC as target classes could perform better.

If only one target class is selected, then all objects are classified as the selected class without considering properties of the objects (i.e. the classification is skipped). In the case that no target class is selected, all objects are classified as MISC.
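
The two special cases above (one target class, no target class) can be made concrete with a sketch. How the classifier chooses among several target classes is not specified here, so picking the highest-scoring target class is an assumption, as are the function name and the score dictionary.

```python
def apply_target_classes(class_scores, target_classes):
    """Pick an output class restricted to the selected target classes.

    class_scores: dict mapping class name -> classifier score (hypothetical).
    target_classes: list of selected classes, e.g. ["PED", "MISC"].
    """
    if not target_classes:
        return "MISC"                 # no target class: everything is MISC
    if len(target_classes) == 1:
        return target_classes[0]      # classification is skipped
    # Assumption: choose the best-scoring class among the targets.
    return max(target_classes, key=lambda c: class_scores.get(c, 0.0))


scores = {"CAR": 0.6, "PED": 0.3, "MISC": 0.1}
print(apply_target_classes(scores, ["PED", "MISC"]))
print(apply_target_classes(scores, ["CYC"]))
print(apply_target_classes(scores, []))
```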

The first order classifier (size based and velocity based) is used first, and only if it can’t decide which class the object belongs to is the second order classifier (machine learning based) applied. Let’s consider the following classifier setting and an object of size (4.5m, 2.7m, 1.5m).

The object is classified as either CAR or MISC by the Size Classifier for Big Objects. If it moves at a speed of 20km/h, it is neither a MISC nor a PED according to the Velocity Classifier, so it will be classified as a CAR. In case it’s not moving, the ML Classifier will be used to determine if it’s a CAR or a MISC.
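
The cascade in this example can be written out as a sketch. All threshold values in `EXAMPLE_CFG` are made up for illustration (the actual classifier setting is not reproduced here), and the function names and the `ml_classifier` callback are hypothetical stand-ins for the second order classifier.

```python
# Hypothetical settings; the real values come from the classifier configuration.
EXAMPLE_CFG = {
    "big_ranges": [(3.0, 20.0), (1.5, 5.0), (1.0, 5.0)],  # length, width, height (m)
    "big_include": {"CAR", "MISC"},
    "non_misc_min_velocity": 5.0,   # km/h
    "ped_max_velocity": 15.0,       # km/h
}


def first_order_classify(size, speed_kmh, cfg):
    """Narrow down the candidate classes by size, then by velocity."""
    candidates = {"CAR", "PED", "CYC", "MISC"}
    # Size classifier for big objects: every dimension lies in its range.
    if all(lo <= v <= hi for v, (lo, hi) in zip(size, cfg["big_ranges"])):
        candidates &= cfg["big_include"]
    # Velocity classifier: fast objects are neither MISC nor PED.
    if speed_kmh >= cfg["non_misc_min_velocity"]:
        candidates.discard("MISC")
    if speed_kmh > cfg["ped_max_velocity"]:
        candidates.discard("PED")
    return candidates


def classify(size, speed_kmh, cfg, ml_classifier):
    """Use the second order (ML) classifier only if the first order is undecided."""
    candidates = first_order_classify(size, speed_kmh, cfg)
    if len(candidates) == 1:
        return next(iter(candidates))
    return ml_classifier(candidates)


size = (4.5, 2.7, 1.5)
stub_ml = lambda candidates: sorted(candidates)[0]   # placeholder for the ML model
print(classify(size, 20.0, EXAMPLE_CFG, stub_ml))          # decided by first order
print(first_order_classify(size, 0.0, EXAMPLE_CFG))        # undecided: ML is needed
```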

Dealing with limited computational resources

If running with multiple lidars or using a computational-resource-limited machine, you can improve the speed of the algorithm by:

- Optimizing the detection range (preferred to general parameter setting).

- Increasing the resolution values in Pipeline > Clusterer. A resolution of 0.1 x 0.1 means that points in a grid cell of size 0.1 x 0.1 will be grouped into one object. Increasing those values can help the clusterer run faster, with the trade-off that objects close to each other can be merged together.

- Increasing the resolution values in Pipeline > Background Detector > Range, Azimuth, Elevation. Increasing those values can help the Background Detector run faster, with the trade-off that objects close to the background can become background.
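
To see why a coarser clusterer resolution is cheaper but merges nearby objects, the sketch below maps points to grid cells and counts the occupied cells. It only illustrates the gridding step under simplifying assumptions; the function name and sample points are hypothetical and the real clusterer does more than this.

```python
import math


def occupied_cells(points, x_res, y_res):
    """Return the set of grid cells (ix, iy) that contain at least one point."""
    return {(math.floor(x / x_res), math.floor(y / y_res)) for x, y in points}


# Two points about 10 cm apart and a third point roughly 1.3 m away.
points = [(0.05, 0.05), (0.15, 0.05), (0.95, 0.95)]

fine = occupied_cells(points, 0.1, 0.1)     # 0.1 m x 0.1 m cells
coarse = occupied_cells(points, 0.5, 0.5)   # 0.5 m x 0.5 m cells
print(len(fine), len(coarse))
```

With the coarser grid the first two points fall into the same cell, so there are fewer cells to process but the two points can no longer be separated.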

Rendering Config Editor

Rendering settings can be found under View > Preference (or press F11).

| Location | Name | Description | Default |

|---|---|---|---|

| Visibility | Object Points | Enable or disable showing points belonging to objects. | True |

| Ground Point | Enable or disable showing ground points. (e.g. floor.) | True | |

| Background Points | Enable or disable showing background points. (e.g. wall or desk…) | True | |

| Intensity Visual Mode | Enable this to color point-cloud by intensity values. Off: Intensity color visualization is off. Fixed Range: Use max and min intensity value from Range below. Auto Range: Calculate max and min intensity value from each frame. | 0: Off 1: Fixed Range 2: Auto Range | |

| Min/Max Point Intensity Range | Color range for intensity visualization. Intensity values outside of range will be clamped to the range. | [0.0, 255.0] | |

| Grid | Enable or disable showing squared Grid. | True | |

| Grid Circular | Enable or disable showing circular Grid. | False | |

| Axis | Enable or disable showing the world XYZ coordinate axis. | True | |

| Objects | Enable or disable showing tracked objects. (e.g. pedestrian) | True | |

| Misc Objects | Enable or disable showing miscellaneous objects. | True | |

| Drifting Objects | Enable or disable showing drifting objects. | True | |

| Invalidating Objects | Enable or disable showing invalidating objects. | True | |

| Validating Objects | Enable or disable showing validating objects. | True | |

| Object Trail | Enable or disable showing the object trail. | True | |

| Predicted Trajectory | Enable or disable showing the predicted trajectory of moving objects. | False | |

| Map Image | Enable or disable showing a map image. | True | |

| Detection Range | Enable or disable showing the detection range of each algo node. | True | |

| Lidar Name | Enable or disable showing the name of each sensor. | False | |

| Lidar Topic | Enable or disable showing the topic of each sensor. | False | |

| Algo Node Name | Enable or disable showing the name of each algo node. | False | |

| Zone Name | Enable or disable showing the name of each zone. | False | |

| Retro-Reflective Objects | Enable or disable showing the retro-reflective objects. | False | |

| Draw Annotations | Enable or disable drawing object annotations | False | |

| Visible Annotations -> Show Object ID | If object annotations are enabled the object’s ID is shown | True | |

| Visible Annotations -> Show Object Class and Probability | If object annotations are enabled the object’s class and probability score of that class is shown | False | |

| Visible Annotations -> Show Object Speed | If object annotations are enabled the object’s speed is shown | True | |

| Visible Annotations -> Show Object Height | If object annotations are enabled the object’s height is shown | False | |

| Color | Background Color | Color of the background. | - |

| Ground Point Color | Color of the ground points. | - | |

| Background Point Color | Color of the background points. | - | |

| Object Point Color | Color of points belonging to objects. | - | |

| Car Color | Color of cars. | - | |

| Pedestrian Color | Color of pedestrians. | - | |

| Cyclist Color | Color of cyclists. | - | |

| Misc Color | Color of miscellaneous objects. | - | |

| Event Zone Color | Color of event zones. | - | |

| Exclusion Zone Color | Color of exclusion zones. | - | |

| Reflection Zone Color | Color of reflection zones. | - | |

| Static Zone Color | Color of static zones. | - | |

| Detection Range Color | Color of the detection range outline. | - | |

| Retro-Reflective Object Color | Color of the retro-reflective object | - | |

| Miscellaneous | Cloud Point Size | Control the visual size of the rendered points. The values can range from 1.0 to 10.0. | 1.0 |

| Use Random Obj. Color | This option colors each object in a different color. | False | |

| Grid Interval [m] | Display grid size. | 10 | |

| View Range | Z Clipping Range Limits [m] | Display Z-range. | [-10.0, 10.0] |
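
The Fixed Range and Auto Range intensity modes in the table above differ only in where the minimum and maximum come from. A minimal sketch, assuming a linear mapping to a 0 to 1 color scale (the actual color map is not described here; the function names are hypothetical):

```python
def normalize_intensity(value, min_intensity=0.0, max_intensity=255.0):
    """Clamp an intensity to [min_intensity, max_intensity] and scale to 0..1."""
    if max_intensity == min_intensity:
        return 0.0  # degenerate range: avoid division by zero
    clamped = max(min_intensity, min(max_intensity, value))
    return (clamped - min_intensity) / (max_intensity - min_intensity)


def auto_range(frame_intensities):
    """Auto Range: take min and max from the current frame."""
    return min(frame_intensities), max(frame_intensities)


frame = [10.0, 60.0, 110.0]                       # made-up intensity values
print(normalize_intensity(300.0))                 # Fixed Range: clamped to the max
lo, hi = auto_range(frame)
print([normalize_intensity(v, lo, hi) for v in frame])
```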

Export Configuration

SENSR can export the configuration changes the user has made into a human-readable format. This is useful for manually checking a project's settings against the settings SENSR uses by default.

To export the configuration changes, go to sensor setup mode and in the top menu bar navigate to File > Export Parameter change Report. This will bring up a dialog box to choose where to export the text file.
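
Conceptually, such a report lists the parameters whose values differ from the defaults. The sketch below shows that idea on plain dictionaries; it does not reproduce the format of the exported file, and the function name and the sample values are hypothetical.

```python
def changed_parameters(defaults, current):
    """Return {name: (default_value, current_value)} for changed parameters."""
    return {name: (defaults.get(name), value)
            for name, value in current.items()
            if defaults.get(name) != value}


# Default values taken from the General Parameters table; current values made up.
defaults = {"Publish Point-Cloud": 1, "Update Interval": 0.1}
current = {"Publish Point-Cloud": 2, "Update Interval": 0.1}

for name, (old, new) in changed_parameters(defaults, current).items():
    print(f"{name}: default={old}, current={new}")
```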